06_2012

+++++++++++++++++++++++++++++++++++++++++++++++++++

Night: Light is a dance show that premiered on June 2nd, 2012 at Ircam in Paris, France. It was commissioned and produced by Ircam-Centre Pompidou.

Ensemble Alternance

Alban Richard – dance

Jean-Luc Menet – flute

Jean-Marie Cottet – piano

Alexandra Greffin – violin

Frédéric Baldassare – cello

Alban Richard – choreography

Olivier Pasquet – live electronics

Raphael Cendo – instrumentals

Maxime Le Saux – sound engineer

Martha Moore – choreographic assistant

Nathalie Schulmann – body consultant

Corine Petitpierre – clothes design

Walt Whitman – libretto (1855)

sociologic conception of concert

The traditional concept of the classical music concert barely changed over the first decade of the 21st century. In the previous century, the 1960s and 1970s were very prolific years for original concert setups. People like Cornelius Cardew or Terry Riley composed pieces that socially involved the audience in both the compositional and the performance processes. Merging these two processes gave, in many ways, a political dimension to their music. The human aspect was also important for Lou Harrison, who contributed to a rediscovery of non-Western music. Beyond purely musical aspects, his pieces for Balinese gamelan opened the concert to the outside world. People like Harry Partch had a similar approach, not with people but with a peculiar instrumentarium he could share with both musicians and the audience. His microtonal instruments added a unique visual component to the concert, turning it into an event between theatre, installation work, and concert. Nowadays people like Nicolas Frize or François Regis have a similar human approach in which a concert is not only the presentation of a piece of music but also a social event where the audience takes part in all musical components such as composition, playing, sharing and listening. This radically changes the perception of the concert. Here we are talking about truly interactive art in which visitors and their surroundings are active in both directions.

building and architectural design

It is a question of duality between the inside and the outside. I will not write about Immanuel Kant in this article but the concept of the music concert has a lot to do with transcendental idealism. Physiological perception, cultural background, and interests are clearly filters for listeners, so it is all about belief. Part of the composer’s art is to make people believe and to lead them through guided dreams. This is why there is no need or quest for truth in music and nothing to prove during concerts. Composers often make the mistake of trying to prove something. The duality between inside and outside is not only between pure music and listeners as unique beings. It is also between composers and instrumentalists, between players and audience, between individual listeners, between the piece and society, between backgrounds, between writing and interpretation, between now and earlier, and between expectations. These dualities are fields in the sense Pierre Bourdieu gives to the word. Fields build a complex sharing network between components whose importance differs from one concert to another. They can sometimes be voluntary and controlled but this is not always the case. In any case, they cannot be totally controlled. Creators who believe they control everything in their piece forget that this is just an interesting utopia and nothing else. The writing can be as precise and general as possible, yet one will never take over the listener’s independence, whatever the kind of music or event. This is even the case for live generative music played in darkness on headphones with no audience. Once the piece is considered ready for performance, it is spread onto the complexities of the real world. It is somehow infinitely copied. Only one copy belongs to its creators and it is the only one over which they think they have control. A piece can be considered the catalyst, and sometimes the creator, of new states in the real world (effects on the audience, physical sound pressure, etc.).

Time and space both play a major role in the architecture of that complex sharing network. The architecture and design of real physical forms also take part in that network; they are an important part of the compositional process of a performance. Many parallels can be drawn between the networked architecture described earlier and real spatial architecture. Several conceptions are involved:

First of all, the outside of the building defines, and sometimes rules, the way people will consider the show. You just have to go to the Berliner Philharmonie or Tokyo Opera City on a Saturday night to understand the influence the image of the building has on people and on their way of attending an event. Derrick May often describes the birth and spread of house music in the late 80s from clubs like the Warehouse in Chicago or the Music Institute in Detroit. The inside atmosphere of these clubs changed after a while, once people knew they were in a historic place; resonance from the outside comes socially.

Then, the inside of the building, including a stage if there is one, shapes both the view and the acoustics offered to the audience. The sounds and sights of the inside of the building are part of the performance, and often part of the instrumentarium as well. There are two famous examples in Western culture: medieval music and organ music. The audience is inside a religious public space and this space is the instrument’s body. Many sound artists simply use space as a resonator; for instance Carl Schilde in his Wow project in 2012.


composing space

Architecture is socially important, so involving it in a sound art piece is consequently significant too. The most obvious architectural element is the stage and its surroundings: the inside architecture. This is the case for most installation works in museums or for stage design in music theatre and opera. Composers often write music with space in mind. Instrumentalists always play knowing they are in a certain space and building. For instance, they play slower in large reverberant spaces. Another example is the position of musicians in the building. Position and reverberation become a beautiful and convenient characteristic as well as one more constraint. The way instruments are laid out in an orchestra is precisely designed to get the widest possible sound. Space and building can thus become compositional dimensions as important as any other, such as pitch, duration, or dynamics. Composers have several options here. One can simply ignore space: the piece then takes no care of positions, movements, and other spatial characteristics. One can radically avoid it by using headphones, virtual reality systems, darkness or reverberation-cancelling techniques. Finally, one can deliberately use it, either passively or actively. A passive use does not move over time, so the spatial composition does not change. This is the case with natural reverberation, stage design, and other musical constraints that take care of sonic and physical space. An active use allows the spatial component to be dynamic over time.

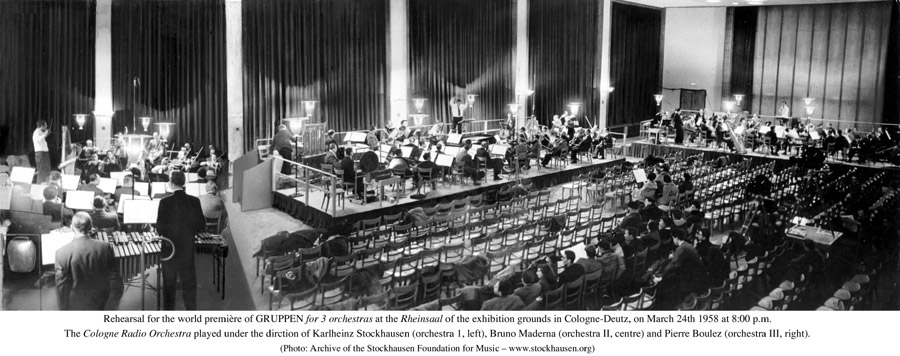

In that case, we are dealing with time-based art in which space passively becomes dynamic. The overall shape of a piece changes over time, as do its surroundings. This case raises the question of deliberately linking space and time together and composing music from that link. I mentioned earlier participative pieces and works in which musicians and the audience move during the performance. Some people prefer to think only of sounds moving around the audience. Classical pieces such as Karlheinz Stockhausen’s Gruppen, Iannis Xenakis’s Metastaseis or Luciano Berio’s La Vera Storia are good examples among many others. The ultimate spatialization consists of using only amplification with electrically controlled spatialization.

electric spatialization

Early research at GRM, Birmingham University’s BEAST and many other electronic music studios around the world focused heavily on space composition as soon as it became possible to use more than one speaker. Since audio control is electric and precise, it is possible to tightly integrate space and position with all other available compositional dimensions. Arrays of speakers and real-time effects become powerful tools for conceptualizing, creating, and performing. A piece like Pierre Boulez’s Répons is a good example. The Lichtung pieces for ensemble by Emmanuel Nunes, Perspectivae Sintagma or Robert Henke’s work are more subtle examples in terms of spatial composition.

Electronic spatialization based on electric amplification and transformation has become very popular because it makes it rather easy to link the abstraction of music with more concrete and physical subjects. Those links are often pure fantasy or only conceptual. There is nothing wrong with that. But people should keep dreaming and conceptualizing only if they are aware that their concept is pure abstraction. Composers often get lost in concepts and forget about audio and visual perception and its physiological constraints. Beyond that, the frontier between belief and perception is amazingly interesting. To put it simply, I think it is the most interesting part of the work in all arts. Media artists like Granular Synthesis or Bill Viola use this idea in their installation pieces. They both physiologically control the perception of time. This is also what happens in clubs. People dance all night long with very good sound quality and high-quality music. After a while, nobody knows whether the quality and pleasure come from the actual place or from themselves.

When Thomas Edison or Charles Cros played sounds from their early machines, people truly believed it was the ultimate sound quality and that very little remained to be done to make sounds completely realistic. Except for the fact that we are less romantic and care less about reality and sound quality, nothing had changed by the beginning of the 21st century. Sound still comes out of a piece of cardboard, although there has been huge progress. Audio spatialization specialists have tried to narrow the gap between belief and perception. They have made the gap between thought and truth thinner. They would ultimately succeed by finally turning composers’ pure concepts into real ones. I believe they will always fail and will end up giving even more ideas to those who prefer to only conceptualize their piece rather than expose it to the outside world. Therefore, I think the work of spatialization specialists is worth doing for both kinds of creators: very basically, those for whom the piece is the score and those for whom the piece is about sound efficiency.

espro wfs system with one speaker array in the rear

modulor for spatial composition and perception

The size of the space seems very important to me. Espro is a 350 to 400-seat hall and it seems to have the “proper” anthropometric size and ratio. It is not a question of human size in the way we would think of it in architecture. It is rather a question of human hearing bandwidth, of loudness limits, and mostly of the ratio between the number of speakers and the distance between them. In other words, it is a question of the ratio between the physiology of human hearing and the acoustic characteristics of the hall. Could there be a Le Corbusier’s Modulor for sound spatialization? I believe so! From my personal experience, I actually feel that sizes, their ratios, and the number of speakers have an optimal correspondence. There could perhaps be some golden ratio explained by our hearing physiology and our psychological understanding of space. Here again, architecture has an important role. I would freely define halls as optimal if they have 1300 seats, 400, 20, or 1 with headphones. These sizes are subjective impressions but still very important. Nevertheless, speed of panning, spatial articulation, and dynamics are also very important factors for space and for the perception of the building. In other words, time is another decisive factor. Dance theorists widely explain this by stating that gestural articulation is linked not only with the speed and intensity of movement but also with the size of the space in which it is performed. It can also be linked with the distance from which someone watches the dancer. When a sound moves toward the sweet spot, both its loudness and its pitch increase (the latter through the Doppler effect). Loudness, glissando, and filtering define spatial articulation. Conversely, movements make the sound change over time, so some sonic articulations are closely linked with the movement and placement of the listener. I often alter the gain curve and the pitch shift over space in order to increase or decrease both the sensation of movement and sonic articulations.
This is not a scientific way of working, and software like Spat does not provide the option of building a non-natural gain curve over distance. In doing so, I subvert the intended use of such software by avoiding the logic of a centered sweet spot. I am able to change spatial parameters depending on the hall where I play the music. This is why, when using reverb purely for reverberation, I do it in real time in order to be able to tweak spatial controls in the hall itself rather than in the studio beforehand. Also, when using my envelope spatialization system, the larger the room, the shorter the envelopes will be. For the same reasons, in pure electronic music (including dance music), I change the resonance frequency of the synthesis that generates the bass. It depends on the resonance frequency of the hall, and therefore on its size. There is no doubt size is important for music. This is why techno cathedrals like Berghain’s Panorama Bar or E-Werk are great places for listening to sound and music with clean power and precise bass.
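The three ideas in this paragraph (a gain curve over distance that need not be natural, a Doppler shift that can be exaggerated or flattened, and a bass resonance tied to hall size) can be sketched in a few lines of Python. This is only an illustration under stated assumptions, not the actual patch used for the piece: the function names, the `exponent` and `exaggeration` controls, and the use of the lowest axial room mode as "the resonance frequency of the hall" are all hypothetical choices.

```python
SPEED_OF_SOUND = 343.0  # m/s, assumed value for air at room temperature


def distance_gain(distance_m, exponent=1.0, reference_m=1.0):
    """Gain relative to the reference distance.

    exponent=1.0 is the natural inverse-distance law; other values give a
    deliberately non-natural curve (hypothetical control, not a Spat option).
    """
    d = max(distance_m, reference_m)  # clamp so the gain never exceeds 1
    return (reference_m / d) ** exponent


def doppler_ratio(radial_speed_ms, exaggeration=1.0):
    """Pitch ratio heard by a static listener.

    A positive speed means the source approaches (must stay below the speed
    of sound). exaggeration=1.0 is the physical shift, 0.0 removes it.
    """
    natural = SPEED_OF_SOUND / (SPEED_OF_SOUND - radial_speed_ms)
    return natural ** exaggeration


def lowest_axial_mode_hz(room_length_m):
    """Lowest axial resonance of a room dimension: f = c / (2 L)."""
    return SPEED_OF_SOUND / (2.0 * room_length_m)
```

With these definitions a larger hall gives a lower resonance frequency, and raising `exponent` above 1 makes a moving source fade faster than it naturally would, which strengthens the sensation of movement.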

I also avoided the question of the sweet spot when doing the electronics for Night: Light. I started working in the smaller Ambisonic studio at Ircam, where I sometimes listened to sounds while looking up at the ceiling rather than looking horizontally as usual. Sound position is heard much better in front of the head than behind it. When listening while looking upward, the entire Ambisonic system is located in front. This gives the impression of looking at a sonic painting rather than being immersed in the more traditional way. In this way all speakers are heard equally, even though the Ambisonic concept is no longer properly used.

In many other pieces, I tried to use a different reverberation in each corner of a large space. For instance, visitors of an installation could theoretically walk from one reverberated space to another in the same hall. The effect is a sonic version of Olafur Eliasson’s Din Blinde Passager, which plays with colored gradients of fog. One sound source could also cross a reverberated slice of the hall. I could barely get proper results, even with a relatively large number of speakers. I eventually succeeded by mixing several parallel Ambisonic systems in Espro. It is also possible to make interesting non-continuous sonic environments by slightly hacking Ambisonic’s spherical harmonics. When listening carefully in the darkness, this system allows spatial characteristics to change over time but also over space: a single source continuously traveling through the hall changes its diffusion depending on its path.

a part of the light and speakers set up for Night: Light


3d spatialization and reverberation

New spatialization systems and ideas arose over the past century within this context. There are now many: VBAP, DBAP, binaural, transaural, MS, AB, Ambisonic, Wave Field Synthesis, etc. Ircam has been developing a software spatialization system called Spat since 1994. It first ran on ISPW’s Max FTS. This piece of software basically offers powerful ways to place sound sources in a virtual space. The Acoustic and Cognitive Spaces team, with Olivier Warusfel and wizards Markus Noisternig and Thibaut Carpentier, is still making huge progress on it. As expected when using virtual spaces in concert situations, this system is only theoretical but it is pretty good. Its reverb is, along with Lexicon’s, one of the best I have heard so far.

This tool works in a rather scientific way. The reverb algorithm sounds the way it should scientifically sound and the position of sources is calculated in a canonical way. Some competing reverberation techniques cheat and use efficient tricks, like a flanger at the output, to get a dense sound. Spat’s reverb is nevertheless sometimes important for the “proper” spatialization needed in virtual reality applications and games. It is another story in a hall, with its acoustic particularities and visual constraints. In some cases, setting a source at a precise position means nothing for either the audience or the music. The difference in position is sometimes more important. In other cases, what matters is the difference of the difference (acceleration). This is particularly the case with a deaf audience where nobody is sitting in the same place. I often avoid the question by using envelope spatialization in my work. In other words, I often prefer to work with position differences when position itself does not matter.
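To make "position difference" and "difference of difference" concrete, here is a minimal Python sketch using finite differences on a sampled trajectory. It is an illustration only: the helper names and the choice of azimuth in degrees as the sampled parameter are assumptions, not part of Spat or of the envelope system mentioned above.

```python
def differences(values):
    """Successive differences between neighbouring samples."""
    return [b - a for a, b in zip(values, values[1:])]


def motion_descriptors(azimuths_deg, dt):
    """Angular velocity and acceleration of a sampled trajectory.

    azimuths_deg: source azimuth sampled every dt seconds (hypothetical data).
    Returns (velocity in deg/s, acceleration in deg/s^2).
    """
    velocity = [d / dt for d in differences(azimuths_deg)]
    acceleration = [d / dt for d in differences(velocity)]
    return velocity, acceleration


# Two trajectories that start at different absolute positions
# but describe exactly the same movement.
front = motion_descriptors([0.0, 10.0, 30.0, 60.0], dt=1.0)
side = motion_descriptors([100.0, 110.0, 130.0, 160.0], dt=1.0)
```

Both trajectories yield the same velocity and acceleration lists, which is the point of the paragraph: listeners seated in different places disagree about where a sound is, but they can agree on how it moves.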

Several audio diffusion systems have been created to avoid the question of audience placement and speaker position. The most efficient so far is still a binaural system using headphones. It does not have to deal with the audiovisual constraints I described earlier. Other attempts are made with techniques people usually call 3D audio.

wfs and ambisonic (hoa) at ircam

Wave Field Synthesis is a sound reproduction technique using loudspeaker arrays that redefines the limits set by conventional techniques (stereo, 5.1 …). These techniques rely on stereophonic principles allowing the creation of an acoustical illusion (as opposed to an optical illusion) over a very small area in the center of the loudspeaker setup, generally referred to as a “sweet spot”. WFS, on the other hand, aims at reproducing the true physical attributes of a given sound field over an extended area of the listening room. It is based on Christiaan Huygens’ principle (1678), which states that the propagation of a wave through a medium can be formulated by adding the contributions of all of the secondary sources positioned along a wavefront. Ircam recently installed a dual system for dual use: a Wave Field Synthesis system and an Ambisonic system in the Espro (espace de projection) concert hall. This hall has variable acoustics controlled by rotating panels. The system can be used by both researchers and musicians. A total of 350+ speakers are used in Espro. This great number reproduces virtual spaces with much better definition than a smaller number of perceivable, discrete speakers. In addition, the production department bought a 25-speaker Ambisonic system for one of its studios. This extra facility avoids schedule pressure and gives more freedom to experiment in a proper environment.
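Huygens' principle translates into a very simple timing rule: each loudspeaker of the array acts as a secondary source and fires when the virtual wavefront would reach it. The Python sketch below computes only those relative delays for a virtual point source behind a straight array. It is a deliberately reduced illustration, assuming a linear array on the line y = 0 and a speed of sound of 343 m/s; a real WFS driving function also applies per-speaker gains and filtering, which are left out here, and `wfs_delays` is a hypothetical name.

```python
import math

SPEED_OF_SOUND = 343.0  # m/s, assumed


def wfs_delays(speaker_xs, source_xy):
    """Relative firing delays (seconds) for a linear array on the line y = 0.

    speaker_xs: x positions of the loudspeakers in metres.
    source_xy: (x, y) of the virtual source, with y < 0 (behind the array).
    The speaker closest to the virtual source fires first (delay 0).
    """
    sx, sy = source_xy
    distances = [math.hypot(x - sx, sy) for x in speaker_xs]
    nearest = min(distances)
    return [(d - nearest) / SPEED_OF_SOUND for d in distances]


# Three speakers one metre apart, virtual source two metres behind the middle one.
delays = wfs_delays([-1.0, 0.0, 1.0], (0.0, -2.0))
```

The middle speaker fires first and the two outer speakers fire together slightly later, so the summed output approximates a curved wavefront that seems to originate behind the array.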

espro ambisonic – speakers are the white boxes on the wall and the ceiling


Ambisonic is a surround sound system first developed in the 1970s. Its main difference from other surround techniques is that it separates transmission channels from speaker feeds, the speaker feeds being derived by a decoder situated in the listening room. Decoders can be implemented in either hardware or software. Typically more speakers are used than transmission channels, and the more speakers are used, the more stable the resulting sound field. Speakers can be arranged in a number of configurations, regular polygons being the most popular.
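The separation between transmission channels and speaker feeds can be illustrated with a horizontal first-order example in Python. This is a minimal sketch under stated assumptions: the three channels are unnormalized (real formats scale W in various ways), the decoder is the basic one for a regular polygon, and both function names are hypothetical.

```python
import math


def encode_first_order_2d(sample, azimuth_rad):
    """Encode one mono sample into three transmission channels (W, X, Y)."""
    return (sample,
            sample * math.cos(azimuth_rad),
            sample * math.sin(azimuth_rad))


def decode_regular_polygon(channels, n_speakers):
    """Derive speaker feeds for n_speakers equally spaced on a circle.

    Speaker i sits at azimuth 2*pi*i/n_speakers. The encoded signal knows
    nothing about the layout; only the decoder does.
    """
    w, x, y = channels
    feeds = []
    for i in range(n_speakers):
        phi = 2.0 * math.pi * i / n_speakers
        feeds.append((w + 2.0 * (x * math.cos(phi) + y * math.sin(phi))) / n_speakers)
    return feeds


# The same three channels could be decoded to any regular layout; here, an octagon.
channels = encode_first_order_2d(1.0, 0.0)
feeds = decode_regular_polygon(channels, 8)
```

Here three channels feed eight speakers. The speaker facing the source is the loudest, but every speaker receives some signal (some with inverted polarity), which is typical of this kind of decoding.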

The Espro concert hall is a perfect place for systems like Ambisonic and WFS, except for the fact that there is asbestos in its walls. It is planned to be removed in 2013. It is physically possible to change the hall’s acoustics, and the space is visually neutral enough that one can do almost anything one wants. The software for controlling the entire sound diffusion system is the same Max library used for other traditional pieces: Spat. It can theoretically use high-order Ambisonic encoders and decoders; up to 120. The maximum order is in practice 9. We are talking about HOA: Higher Order Ambisonic. In 2012 it is the hall with the highest Ambisonic order. The higher the order, the higher the upper frequency limit of accurate reproduction.
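Two relations help to read these figures. A full-sphere Ambisonic signal of order N carries (N + 1)² channels, and a commonly quoted rule of thumb says that reconstruction stays accurate inside a radius r up to roughly the frequency where N = 2πfr/c. The Python sketch below encodes both; the rule of thumb is an approximation, the 343 m/s speed of sound and the example radius are assumptions, and the function names are hypothetical.

```python
import math

SPEED_OF_SOUND = 343.0  # m/s, assumed


def hoa_channel_count(order):
    """Number of channels of a full-sphere Ambisonic signal of the given order."""
    return (order + 1) ** 2


def approx_max_frequency_hz(order, radius_m):
    """Rule-of-thumb upper frequency for accurate reconstruction within radius_m.

    Derived from order ~= k * r with k = 2 * pi * f / c; a rough guide only.
    """
    return order * SPEED_OF_SOUND / (2.0 * math.pi * radius_m)


# Order 9 as mentioned in the text; 0.1 m is an assumed, roughly head-sized radius.
channels_at_order_9 = hoa_channel_count(9)
limit_small_area = approx_max_frequency_hz(9, 0.1)
limit_large_area = approx_max_frequency_hz(9, 1.0)
```

Under this approximation, raising the order raises the frequency limit, while asking for accuracy over a larger listening area lowers it, which is one way to understand why a hall full of listeners is so demanding.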

I explained earlier that Spat uses the concept of source placement. Sources evolve in a virtual acoustic space, so this only has a meaning in some restricted situations. Composer Rama Gottfried (CNMAT, Berkeley) was invited to Ircam to write a piece for the inauguration of this new audio system. He did excellent work getting around the virtual source question while keeping a proper perception of space. He used a large number of grouped sources and controlled them with spatial constraints. He did what we all do with multi-agent systems and other flockers. But this time the result had a precision I had personally never heard in any other project in which I used such techniques.


Alban Richard’s face expression and Goya’s Black Painting

a setup for the dance piece

The experiments I did with the actual system proved once again that Ambisonic is good for surround ambiance but far too smooth for moving sounds. Traditional panning is much better for dynamic content. Ambisonic is based on the idea of reproducing a sound environment recorded by a sphere or circle of microphones. It is then easy to see why it works less efficiently than discrete panning: all speakers are always playing sound, wherever the source is. The question of using this system in a classical music environment such as Ircam’s is not new. It is raised here once again. Spatialization often has existential problems with “old style” traditional concert setups like the ones often used for mixed music. What is the point of making sounds move around an audience when the stage is in front and when the audio-visual intention is nothing more than musicians playing? The worst case happens when a solo amplified instrument is unintentionally heard in the rear and seen in the front. The case for creating an artificial acoustic environment with Ambisonic is not easy to argue either.
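For comparison, discrete panning keeps a source on at most two neighbouring speakers at any time. The Python sketch below shows one common variant, equal-power pairwise panning on a ring of speakers; it is an assumed, generic formulation for illustration, not the panner actually used in the piece, and `pairwise_pan` is a hypothetical name.

```python
import math


def pairwise_pan(azimuth_rad, n_speakers):
    """Equal-power gains for a ring of n_speakers (n_speakers >= 2).

    Speaker i sits at azimuth 2*pi*i/n_speakers. Only the two speakers
    surrounding the source receive signal; all others stay silent.
    """
    spacing = 2.0 * math.pi / n_speakers
    position = (azimuth_rad % (2.0 * math.pi)) / spacing
    left = int(position) % n_speakers
    right = (left + 1) % n_speakers
    fraction = position - int(position)
    gains = [0.0] * n_speakers
    gains[left] = math.cos(fraction * math.pi / 2.0)
    gains[right] = math.sin(fraction * math.pi / 2.0)
    return gains


# A source halfway between speakers 0 and 1 of an eight-speaker ring.
gains = pairwise_pan(math.pi / 8.0, 8)
```

Because the energy is concentrated in one or two speakers, a fast trajectory produces sharp, clearly localized jumps, whereas a decoder that feeds every speaker tends to smear the same movement.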

Both Ambisonic and WFS systems use relatively small speakers. The Ircam system is made of Amadeus speakers. The smaller the speakers, the closer they can be to one another, the more precise the interferences, the flatter the waves, and the more precise the holophonic positions. Unfortunately, small speakers cannot reproduce bass. This is not a problem when only voices are played. Otherwise, subwoofers (which give the weakest possible sense of location) are needed. For Night: Light, I also collaborated with the excellent sound engineer Maxime Le Saux. He had the idea of adding extra d&b speakers and subs in a peculiar way. Two huge clusters of speakers were added in the center of the hall, that is, in the center of the Ambisonic sphere. Each speaker points in a different direction in order to use various directivities and reflections from the walls. When sounds are sent to each speaker in very quick alternation, the audience hears radical changes in space. The setup is visually impressive. Also, the placement of listeners does not matter because they hear something completely different according to their seat. Seats are placed along Espro’s walls so that dancer Alban Richard can perform in the center of the hall. This is not the optimal layout for Ambisonic but it is a compromise taking dance and light into account. Alban’s place is basically the best one but that does not really matter as long as seat position is not the only question. In Espro, the Ambisonic speaker sphere has to hang rather high above the floor. This allows everybody to hear all speakers wherever they are seated. This is not convenient for Night: Light because the audience is sitting on chairs on the floor. So extra speakers are added under the seats, bringing the sonic space down toward the floor where the dance is happening. This is also a good opportunity to do panning spatialization on the floor following Alban Richard’s displacements.

Ambisonic is therefore only used for a quasi-fixed surround ambiance. It is not used for important movements. I decided to create movements only to make the environment richer and not for discrete displacements. There is no intention to make real movements heard. For instance, reverberation is done using Ambisonic.

This is an opportunity to compose with three different concepts at a time: Ambisonic for atmospheric sounds, panning for dynamic moving sounds and my envelope system for sonic constellations. The entire machine is programmed so that it can move smoothly from one concept to another.

smoke machine for preparing the space before the entrance of the public


saturationism style

Raphael Cendo chose a piece he had already composed: Rokh. The piece was initially written for strings and prepared piano. The preparation of the piano gives a good example of the richness and interesting density of the piece. Raphael is pretty good at making pieces that sound like gigantic rocks with a perceivable macroscopic form. The piece has three movements and explores a wide range of sonic materials that are heard in each of the three sections. The theme of the life cycle, death, and resurrection is omnipresent in the entire piece. It is full of brutal changes, long static phases, dense rustling, and violent events as well as subtle returns of life. The style is somehow close to Kasper Toeplitz or Merzbow and I guess he would love Zbigniew Karkowski’s music and personality. Raphael is part of the group of composers who, in the mid-2000s, declared they were making saturation or distortion music with instruments. I completely disagree with this label, even though my disagreement has nothing to do with the quality of the music. Many institutions, and especially Ircam, protect composers’ status to the point where they are confident enough to announce a new movement based on purely technical characteristics. Both composers and Ircam use what they define as “technical” the way they want depending on the situation. When I first discovered “saturationism” I thought it was a joke, but I then understood it was a real media strategy. Although I guess these composers have since become more subtle and stopped using this marketing mechanism, I am open to reading or listening to proper arguments demonstrating that it is not just an old musical trick. Dror Feiler did this long ago and he did not claim any paternity. It would be interesting to compare the politics of this question with what happened to spectral music in the mid-80s.

Since the acoustic music part is rather strong and intense, it was decided to make the entire show intense too. Alban Richard’s dance and the light design are exquisite as well. The audience is very close to the solo dancer, whose face and emotions can easily be seen. I can see Goya’s Saturn Devouring His Son in Alban’s facial expressions. It sometimes looks like Munch’s The Scream or Castellucci being bitten by dogs in his piece Inferno, after Dante. Valérie Sigward’s lighting uses raw light sources like the ones used on construction sites. This lighting is very powerful. It also takes time to reach full brightness and its final color temperature.

where Alban dances – musicians are on the left side wall behind a glass window


suitable musical content

Alban reads a text from Leaves of Grass (1855) by the poet Walt Whitman. Following Raphael Cendo’s instrumental part, I destroy both his voice and the text until it slowly becomes impossible to understand what he says. At one particularly impressive moment of the piece, his reading gradually becomes noisy until it completely fills the hall after large and faster movements. This strong, unique moment in the piece shows how weak humans are against this huge, powerful machinery of speakers.

The ensemble plays in a studio separated from Espro by a huge and heavy glass window. The audience sees them playing but cannot hear them directly. I was talking earlier about issues concerning the placement of acoustic instruments and electronics; the question simply and radically disappears here since they are acoustically dissociated. Asking musicians to play in another room is also conceptually interesting and offers many possibilities for the dancer’s space. Synchronization between musicians, electronics, and the dancer was achieved using networked Max patches on laptops.

It is a very good opportunity to be radical. So I concentrated only on spatialized amplification, with nothing purely acoustic or purely electronic. Although the piece contains a few unremarkable interlude sound files, the composition concentrates only on the movement of real amplified instrumental notes and acoustic effects coming from behind the glass. It is all about writing space.

Alban dancing and one computer screen


spatial composition

The remaining question is how I compose the space controls of the piece. The sharper and brighter sounds are, the better they are localized in time and space. I have followed this postulate for most of my audio and visual work since 2010. This way, I can tightly compose elements at the very limits of perception and stretch the brain’s ability to understand complexity. It is also an amazing possibility for writing maximalist content inside a minimalist construction: I am currently concentrating a lot on what I call the skeletal structure of music: rhythm. On the one hand, the fact that elements are tiny automatically gives a minimal aesthetic to the piece. On the other hand, since a minimalist structure contains another structure, the aesthetic becomes more maximalist only when the piece is read from a closer point of view. I have been inspired by scale relativity. I will not explain the idea precisely here. But one could define this kind of fractal concept as quantum, microscopic or even nanoscopic. I talk here about nanoscales because I think it is interesting to compose nature, material, and music in exactly the same way using nanotechnology theories. They are not new, since many musical techniques such as counterpoint are very close to the building of nano-materials. Curtis Roads is a virtuoso of granular synthesis. In his research using Gabor-style microsounds, he explores the technique so deeply that it becomes musical, and so singular that it sounds different from the usual granular synthesis sound of the 1990s. Other composers like John Oswald, aka Plunderphonics, or Girl Talk work with similar techniques of manual or automatic concatenation. I am personally trying to take these paths in another direction by formalizing and controlling them in a parametric and constructivist way.

Using my jtol library and my envelope spatialization system, sounds can be as close as possible to each other and as short as they can be. As a consequence, I play mostly with sonic structures and much less with timbre in a traditional way. But it becomes even more interesting when timbres become noticeable and movements become continuous, which happens whenever events are close enough in space and time. Also, playing short sounds leaves room for silences. These become as important as sounds and offer the opportunity to compose in a negative way using “perforations”.